The Revolutionary Arrival of AI-Generated Games

The Disruptive GameNGen and the New Milestone

The technology world keeps producing astonishing advances. Just two months ago, Google’s GameNGen upended our perception of AI games: a diffusion-based model generated a playable game in real time, with no game engine involved. GameNGen’s emergence seemed to signal a new era in which developers might no longer need to write code by hand, a shift that could disrupt the entire $200 billion global gaming industry. Any type of game could be customized to a player’s wishes, creating a personalized world. The idea spread quickly through the AI community. At the time, aside from “Black Myth: Wukong,” the hottest topic in gaming was the bold statement by Cai Haoyu, the founder of miHoYo: “AI will disrupt game development. Most game developers should consider changing careers.”

Surprisingly, just two months later, a new milestone has arrived: real-time AI-generated games are no longer just demos but something anyone can play. Yesterday, two startups, Etched and Decart AI, jointly presented Oasis, the world’s first real-time AI-generated game. Every frame you see is predicted in real time by a diffusion model. The picture is rendered continuously at 20 frames per second, with what the companies describe as zero latency. More importantly, the code and model weights are open source.

The Astonishment and Expectations of the Public and Experts

AI’s ability to simulate high-quality graphics and complex real-time interaction caught everyone off guard. Viewers online were stunned, wondering whether they had somehow stepped into “The Matrix.” Even experts in the AI field are watching Oasis closely. Tri Dao, an assistant professor at Princeton and the author of FlashAttention, praised it: “Soon, model inference will become very inexpensive, and much of our entertainment content will be generated by artificial intelligence.”

Playing the First-Ever AI-Generated Game: A Mixed Experience

Initial Anticipation and the Start of the Game

Since Oasis is a game, though, it has to be judged by gaming standards, so we jumped straight in, keeping in mind that this is the world’s first real-time AI-generated game. On the entry screen, Oasis warns: “Please note that every step you take will determine the direction of the entire world.” That immediately raises the excitement. The game’s content is shaped in real time, so everything in this world revolves around the player. Players no longer need to follow fixed patterns or quests, because every second brings a customized surprise from the AI. You start by choosing a terrain such as coast, village, forest, or desert. (Because Oasis is so popular, there is a queue to play, and with limited computing power each user gets a five-minute session.)

The Similarities and Peculiarities during Play

When we finally got in, Oasis was a bit puzzling. It looked a lot like “Minecraft,” and it even had a feel similar to “Monster Hunter Now.” Many players asked, “Is there no copyright issue with this?” Many who tried it shared the same joke: “Tell AI: Make a game based on Minecraft and just change the UI.” Still, when we built structures the way you would in “Minecraft,” the cow shed Oasis generated was quite impressive. After all, this game is driven not by preset logic and programs but by an AI model. Even a simple action like placing one fence next to another involves work that the model handles quietly: identifying the fence you clicked, deciding how it should line up with other objects, and working out how the scene should then be presented.

Compared with its predictive ability, however, memory seems to be a weakness of Oasis. If there was a mountain on the left side of the screen, it might be gone when you look back. Other video models such as Sora, which aim to simulate the physical world, show no such obvious memory loss when the “camera” pans back. Some netizens speculate that parameter count was sacrificed for real-time inference speed. And although the game is officially claimed to run with zero latency, it is somewhat hard to control with the mouse, as if an invisible force were interfering between the mouse and the computer. Clicking an item in one inventory slot often selects a different slot. The in-game text also has a dreamy, hazy quality: it has an outline but is hard to make out. One player put it aptly: “At first, I thought it was Minecraft, but after trying it, it’s like playing Minecraft after eating magic mushrooms.”

The Technical Marvels behind Oasis

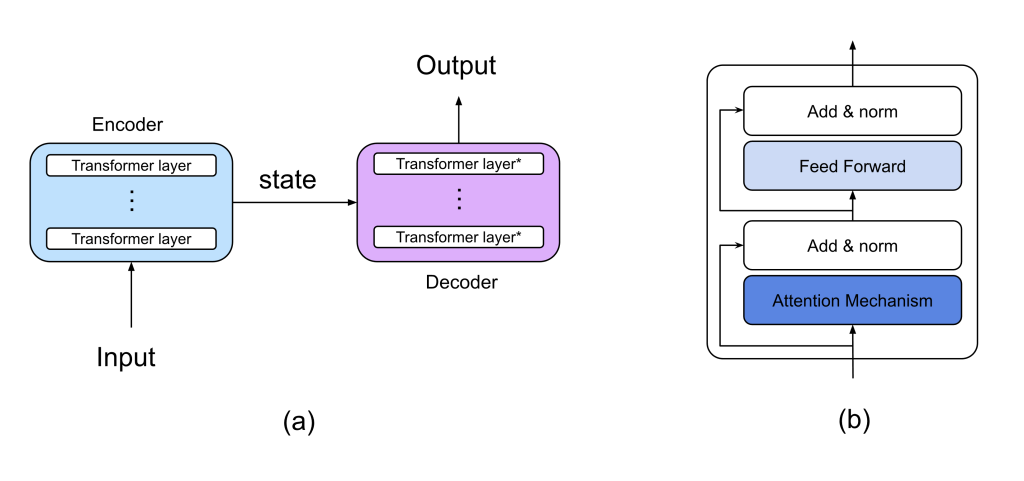

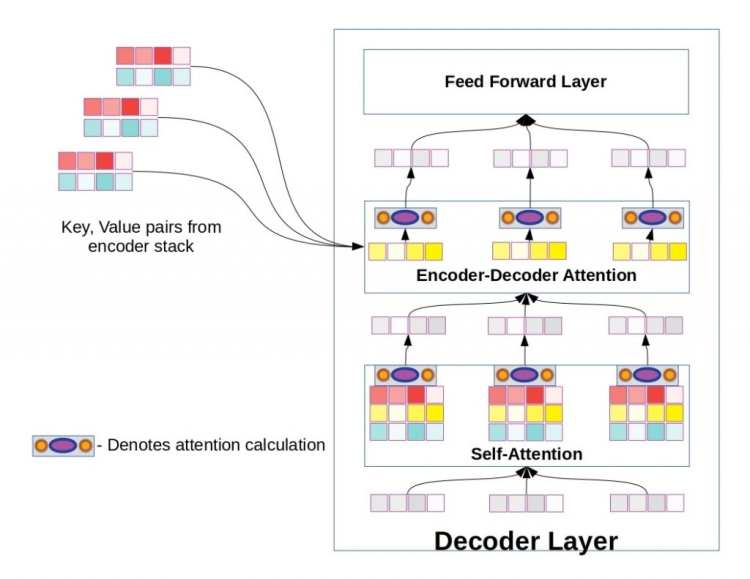

The Architecture of the Model

Both Etched and Decart AI have published technical blog posts about Oasis. Decart AI is mainly responsible for training the model, while Etched provides the computing power. The model consists of two parts: a spatial autoencoder and a latent diffusion backbone. Both are Transformer-based: the autoencoder is built on ViT, and the backbone on DiT. In contrast to recent action-conditioned world models such as GameNGen and DIAMOND, the Oasis team chose Transformers to ensure stable and predictable scaling.

Unlike bidirectional models such as Sora, Oasis generates frames autoregressively and can condition each frame on game input, which is what makes real-time interaction with the generated world possible. The model is trained with Diffusion Forcing, which denoises each token independently. It draws on the context of previous frames through additional temporal attention layers interleaved between the spatial attention layers. The diffusion itself takes place in the latent space produced by the ViT VAE, which both compresses the image and lets the diffusion focus on higher-level features.

Temporal stability, meaning that the model’s output keeps making sense over long time spans, is a major concern for Decart AI. In autoregressive models errors accumulate, and small flaws can quickly snowball into incoherent frames. To address this, the team worked on long-context generation, and the method they chose is dynamic noising. During inference, noise follows a schedule: it is injected in the first diffusion forward passes to reduce error accumulation, then gradually removed in later passes so the model can find and preserve high-frequency details from previous frames.
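The control flow described above can be sketched in a few lines of plain Python. This is only a toy under stated assumptions, not the Oasis implementation: `toy_denoiser` is a hypothetical stand-in for the trained DiT backbone, latents are short lists of floats rather than ViT-encoded images, and the constants (`DENOISE_STEPS`, `CONTEXT_FRAMES`, `MAX_CONTEXT_NOISE`) and the linear schedule are illustrative choices. What it shows is the shape of the loop: each frame is produced autoregressively from earlier frames plus the player's action, and the noise applied to the context frames is highest in the first denoising passes and falls to zero by the last.

```python
import random

# All constants below are illustrative assumptions, not values from Oasis.
LATENT_SIZE = 4          # toy latent length; real latents are image-shaped
DENOISE_STEPS = 5        # denoising passes per generated frame
CONTEXT_FRAMES = 3       # how many previous frames the model may look at
MAX_CONTEXT_NOISE = 0.3  # noise level applied to context in the first pass


def context_noise_level(step, total_steps=DENOISE_STEPS,
                        max_noise=MAX_CONTEXT_NOISE):
    """Linear schedule: most noise in the first pass, none in the last."""
    if total_steps == 1:
        return 0.0
    return max_noise * (1 - step / (total_steps - 1))


def add_noise(latent, level, rng):
    """Blend a latent with Gaussian noise; level 0 returns it unchanged."""
    return [(1 - level) * x + level * rng.gauss(0, 1) for x in latent]


def toy_denoiser(noisy_latent, context, action):
    """Hypothetical stand-in for the learned backbone.

    Predicts the clean latent as the mean of the context frames shifted by
    the action. A real model would also use noisy_latent and the noise level.
    """
    n = len(context)
    return [sum(frame[i] for frame in context) / n + action
            for i in range(len(noisy_latent))]


def generate_frame(history, action, rng):
    """Denoise one new frame, conditioned on recent frames and the action."""
    latent = [rng.gauss(0, 1) for _ in range(LATENT_SIZE)]  # pure noise
    clean_context = history[-CONTEXT_FRAMES:]
    for step in range(DENOISE_STEPS):
        level = context_noise_level(step)
        # Early passes see a noised context, later passes a cleaner one.
        context = [add_noise(f, level, rng) for f in clean_context]
        prediction = toy_denoiser(latent, context, action)
        blend = (step + 1) / DENOISE_STEPS  # trust the prediction more each pass
        latent = [(1 - blend) * x + blend * p
                  for x, p in zip(latent, prediction)]
    return latent


def rollout(first_frame, actions, seed=0):
    """Autoregressive loop: every generated frame joins the context."""
    rng = random.Random(seed)
    history = [first_frame]
    for action in actions:
        history.append(generate_frame(history, action, rng))
    return history


frames = rollout([0.0] * LATENT_SIZE, actions=[1.0, 0.0])
print(len(frames), "frames generated")
```

Because each new frame is appended to `history` before the next one is generated, any error in a frame becomes part of the next frame's context. That is the accumulation problem the noise schedule is meant to dampen.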

The Performance of the Model

Oasis generates real-time output at 20 frames per second. Today’s most advanced text-to-video models with similar DiT architectures (such as Sora, Mochi-1, and Runway) can take 10 to 20 seconds to create one second of video, even on multiple GPUs. To match the experience of playing a game, however, the Oasis model must generate a new frame every 0.04 seconds at most by the team’s figure (a steady 20 frames per second leaves a budget of 0.05 seconds per frame), which they put at more than 100 times faster. With the optimized Decart inference stack, the developers significantly improved GPU utilization and interconnect efficiency, so the model finally runs at a playable frame rate and unlocks real-time interactivity for the first time. Making the model another order of magnitude faster, and cost-effective to run at scale, will require new hardware. Oasis is optimized for Sohu, the Transformer ASIC built by Etched. Sohu can scale to next-generation models with 100B+ parameters at 4K resolution. Moreover, Oasis’ end-to-end Transformer architecture makes it highly efficient on Sohu, which can serve more than 10 times as many users even with 100B+ parameter models. For generative workloads like Oasis, cost is clearly a hidden bottleneck in operation.
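The frame-rate figures above reduce to simple arithmetic, sketched here in Python. The helper names are hypothetical; the only inputs are the two numbers quoted in the text, 20 frames per second and a 0.04-second per-frame target.

```python
def frame_budget_seconds(fps: float) -> float:
    """Time available to produce one frame at a steady frame rate."""
    return 1.0 / fps


def implied_fps(seconds_per_frame: float) -> float:
    """Frame rate sustained if every frame takes this long to produce."""
    return 1.0 / seconds_per_frame


budget_at_20_fps = frame_budget_seconds(20)  # seconds per frame at 20 fps
fps_at_40_ms = implied_fps(0.04)             # frame rate for a 0.04 s frame

print(f"20 fps leaves {budget_at_20_fps:.2f} s per frame")
print(f"0.04 s per frame corresponds to {fps_at_40_ms:.0f} fps")
```

A 0.04-second target is therefore slightly stricter than a steady 20 frames per second requires, which leaves some headroom per frame.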

The Rising Stars: Etched and Decart AI

Etched’s Ambitious Journey

Etched may be an unfamiliar name, but it is one of Silicon Valley’s new AI fundraising success stories. Its two founders, Chris Zhu and Gavin Uberti, both born in 2000, bet on large models built on the Transformer architecture and decided to go all in. In 2022 they dropped out of Harvard University to start the company, focusing on application-specific integrated circuits (ASICs) for Transformer models. In July this year, Etched released its first AI chip, Sohu, claiming: “For Transformers, Sohu is the fastest chip ever, with no rivals.” On the same day, Etched closed a $120 million Series A round, attracting a group of Silicon Valley heavyweights and positioning itself as a challenger to NVIDIA. By Etched’s account, a server with 8 Sohu chips outperforms 160 H100s, making Sohu 20 times faster than an H100, and Sohu is more than 10 times faster and cheaper than even the B200, NVIDIA’s most powerful new-generation chip.

Decart AI’s Background and Achievements

Decart, an Israeli artificial intelligence company, has only just made its public debut. Alongside the release of Oasis came news that Decart has raised $21 million (approximately 150 million yuan) from Sequoia Capital and Oren Zeev. Before launching Oasis, Decart’s main business was building more efficient platforms to improve the speed and reliability of large models. Oasis may be a strong start, and perhaps, building on it, we will be able to play new forms of AI games in the near future.
