Inside the Tech: How On-Device AI Is Learning From Your Photo Album

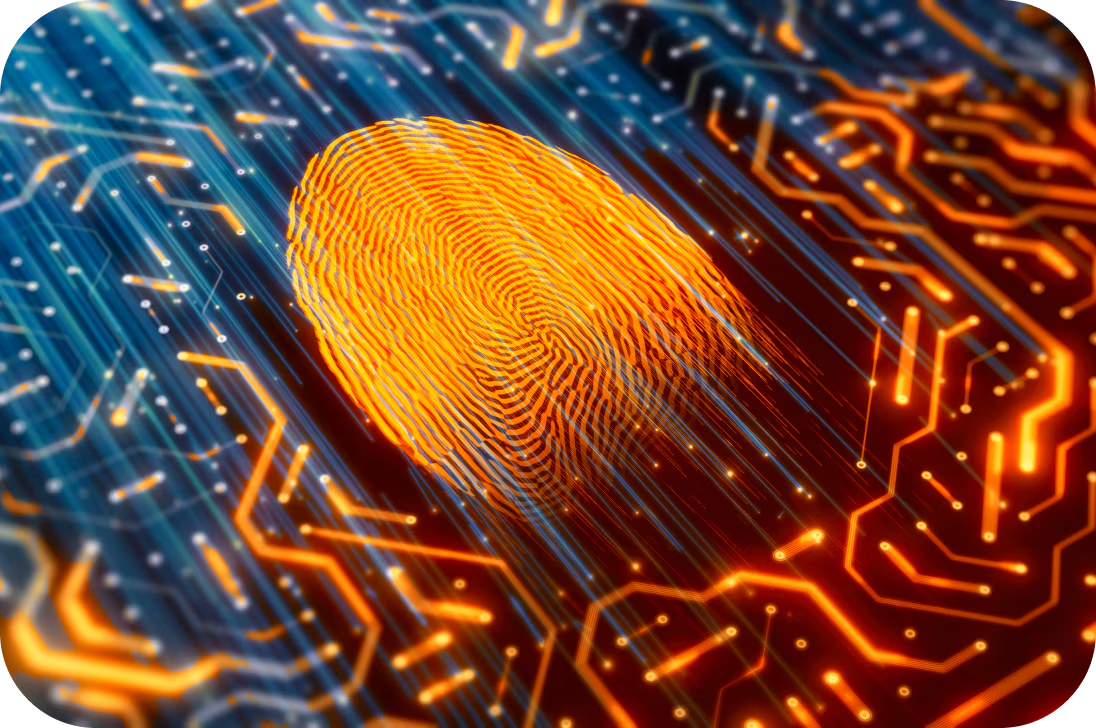

By 2025, smartphones have evolved from communication tools into intelligent personal assistants, powered by increasingly capable edge AI chips. The latest flagship devices from Apple and Samsung include neural processing units (NPUs) that can perform trillions of operations per second, right on the device. These chips were initially marketed as privacy-friendly alternatives to cloud-based AI, enabling features like real-time translation, facial recognition, and smart camera enhancements without uploading data to external servers. But recent disclosures, patent filings, and leaked documents reveal a darker side to this edge intelligence revolution.

According to multiple 2024–2025 patent filings from Apple and Samsung, the NPUs in their latest smartphones do more than optimize the user experience: they also run continuous background processes that analyze photo libraries to improve AI models. Training happens locally; the resulting model updates are anonymized and then partially uploaded, as “aggregated learning contributions,” to central servers, where they feed global model updates.

In simpler terms, your phone is silently reviewing your entire camera roll (selfies, travel pictures, food snaps, and screenshots), not just to tag or categorize photos for your benefit, but to feed proprietary AI models. The technique is known as federated learning, a decentralized machine learning approach that lets companies train models on distributed datasets without collecting raw user data. However, critics argue that the line between anonymization and exploitation is blurrier than advertised.
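Federated learning is most often explained through federated averaging (FedAvg): each device trains a copy of a shared model on its own data, sends back only the updated parameters, and a server averages those parameters, weighting each device by how many samples it used. The sketch below is a minimal illustration of that loop, assuming a toy one-feature linear model and synthetic data. It says nothing about the actual CoreSight or PhotonAI pipelines, and the helper names (`make_device_data`, `local_update`, `federated_average`) are hypothetical.

```python
import random
from typing import List, Tuple

Vector = List[float]
Sample = Tuple[float, float]

def make_device_data(rng: random.Random, n: int) -> List[Sample]:
    # Synthetic "private" samples drawn from y = 1 + 2x plus a little noise.
    data = []
    for _ in range(n):
        x = rng.random()
        data.append((x, 1.0 + 2.0 * x + rng.gauss(0.0, 0.05)))
    return data

def local_update(weights: Vector, data: List[Sample],
                 lr: float = 0.1, epochs: int = 5) -> Vector:
    # Plain SGD on a toy linear model y = w0 + w1 * x, run entirely on the device.
    w0, w1 = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w0 + w1 * x) - y
            w0 -= lr * err
            w1 -= lr * err * x
    # Only the updated parameters are returned (uploaded); the samples stay local.
    return [w0, w1]

def federated_average(updates: List[Vector], sizes: List[int]) -> Vector:
    # FedAvg: weight each device's parameters by its number of training samples.
    total = sum(sizes)
    return [sum(u[i] * n for u, n in zip(updates, sizes)) / total
            for i in range(len(updates[0]))]

rng = random.Random(0)
devices = [make_device_data(rng, n) for n in (20, 35, 50)]  # three simulated phones

global_w = [0.0, 0.0]
for _ in range(20):  # communication rounds
    updates = [local_update(global_w, d) for d in devices]
    global_w = federated_average(updates, [len(d) for d in devices])

print([round(w, 2) for w in global_w])
```

Each call to `local_update` sees only one device's samples, yet the averaged weights converge toward the underlying relationship (intercept 1, slope 2). That is the sense in which raw photos "never leave the phone": what leaves is a parameter update, and parameter updates can still carry information about the data that produced them.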

Internal engineering documents leaked earlier this year show that Apple’s “CoreSight” system and Samsung’s “PhotonAI” pipeline use image metadata, facial embedding vectors, and object segmentation data to refine features like person recognition, image search, and generative editing suggestions. These features evolve continuously through machine learning loops, many of which run silently in the background and are activated when the phone is idle or charging.

In most regions outside the EU, the functionality is switched on by default and users must opt out. Even when the data is technically anonymized, the sheer volume and intimacy of what is involved, with millions of private photos scanned and dissected for AI improvement, raise serious concerns about consent, control, and the definition of privacy in the AI era.

Privacy Under Siege: The EU Investigates AI Training Leak

The hidden processing of personal photos might have remained a technical footnote if not for a high-profile incident in early 2025. A whistleblower from an AI subcontractor working with multiple smartphone vendors leaked internal logs showing how supposedly anonymized image features could, in certain edge cases, be reverse-mapped to individual users. The whistleblower, who provided evidence to the European Data Protection Board (EDPB), alleged that certain compressed visual fingerprints, especially those involving distinctive surroundings or tattoos, could be traced back to individuals by cross-referencing them with datasets from social media and public image repositories.
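The alleged weakness is a form of linkage (re-identification) attack. If a “compressed visual fingerprint” is, in effect, an embedding vector, anyone holding embeddings computed from public, labelled photos can search for the nearest match. The toy sketch below assumes 4-dimensional vectors (real image embeddings typically have hundreds of dimensions), cosine similarity as the distance measure, and an arbitrary match threshold of 0.95; the function names and account labels are hypothetical.

```python
import math
from typing import Dict, List, Optional, Tuple

Vector = List[float]

def cosine_similarity(a: Vector, b: Vector) -> float:
    # 1.0 means the vectors point the same way; values near 0 mean unrelated.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def link_fingerprint(fingerprint: Vector,
                     public_index: Dict[str, Vector],
                     threshold: float = 0.95) -> Optional[Tuple[str, float]]:
    # Return the best-matching public identity, or None if nothing is close enough.
    best_label, best_score = None, -1.0
    for label, embedding in public_index.items():
        score = cosine_similarity(fingerprint, embedding)
        if score > best_score:
            best_label, best_score = label, score
    if best_label is not None and best_score >= threshold:
        return best_label, best_score
    return None

# Hypothetical embeddings computed from public, labelled photos.
public_index = {
    "account_a": [0.90, 0.10, 0.00, 0.40],
    "account_b": [0.10, 0.80, 0.50, 0.00],
    "account_c": [0.00, 0.20, 0.90, 0.30],
}

# An "anonymized" fingerprint from a photo with a distinctive tattoo or setting.
leaked = [0.88, 0.12, 0.02, 0.41]
generic = [0.50, 0.50, 0.50, 0.50]

print(link_fingerprint(leaked, public_index))   # matches account_a
print(link_fingerprint(generic, public_index))  # no confident match
```

Removing a name does not remove distinctiveness: a sufficiently unusual vector can still sit very close to a public photo of the same person or place, while a generic one falls below the threshold.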

The allegations triggered an immediate investigation in the European Union under GDPR Article 22, which restricts decisions based solely on automated processing, including profiling. The probe, code-named “Project Obscura,” involved coordination between national data protection authorities in Germany, France, and the Netherlands. Investigators demanded transparency reports from Apple, Samsung, and Google, focusing specifically on how user photos were processed on-device, what metadata was extracted, and how much control users had over these processes.

While the companies insisted that no identifiable data left the device and that contributions to central models could not be mathematically traced back to individuals, early EDPB findings suggested otherwise. In particular, the board cited problems with differential privacy thresholds, noting that devices varied widely in how strictly they enforced noise-injection protocols; some older phones reportedly ran outdated firmware that lacked those protections entirely.
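Noise injection in federated learning usually follows the recipe used for differentially private training: clip each update to a maximum L2 norm, then add Gaussian noise scaled to that norm. The sketch below applies the noise on the device (a local-noise variant) to mirror the finding that enforcement varied per device. For simplicity every simulated device sends the same underlying update; `max_norm` and `noise_multiplier` are illustrative values rather than any vendor's settings, the helper names are hypothetical, and the code does not compute a formal privacy budget (ε).

```python
import math
import random
from typing import List

Vector = List[float]

def clip_to_norm(update: Vector, max_norm: float) -> Vector:
    # Bound any single device's influence by rescaling updates whose L2 norm exceeds max_norm.
    norm = math.sqrt(sum(u * u for u in update))
    if norm <= max_norm:
        return list(update)
    return [u * (max_norm / norm) for u in update]

def privatize(update: Vector, max_norm: float, noise_multiplier: float,
              rng: random.Random) -> Vector:
    # Clip, then add Gaussian noise with standard deviation noise_multiplier * max_norm.
    # noise_multiplier = 0 models a device whose firmware skips noise injection.
    sigma = noise_multiplier * max_norm
    return [c + rng.gauss(0.0, sigma) for c in clip_to_norm(update, max_norm)]

rng = random.Random(42)
true_update = [3.0, 4.0]  # L2 norm 5, so clipping to norm 1 gives [0.6, 0.8]

# One protected report on its own is mostly noise...
one_report = privatize(true_update, max_norm=1.0, noise_multiplier=1.0, rng=rng)

# ...but averaging many protected reports recovers the aggregate signal.
reports = [privatize(true_update, 1.0, 1.0, rng) for _ in range(10_000)]
mean = [sum(r[i] for r in reports) / len(reports) for i in range(2)]

# A device with noise injection disabled uploads its clipped update unmasked.
leaky = privatize(true_update, 1.0, 0.0, rng)

print("single noised report:", [round(v, 2) for v in one_report])
print("mean of 10,000 reports:", [round(v, 3) for v in mean])
print("unprotected report:", leaky)
```

The average over 10,000 reports lands close to the clipped update [0.6, 0.8], which is the trade the protocol relies on. A device running with `noise_multiplier=0`, standing in for outdated firmware, returns its clipped update exactly, so nothing masks its individual contribution.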

Civil society groups, including Privacy Frontline and Algorithmic Transparency Europe, launched campaigns calling for an immediate suspension of federated photo training until stronger regulations could be enacted. A petition demanding user-facing toggles and full transparency logs gathered over two million signatures in less than a week. For many, the discovery felt like a betrayal: a photo that never left your phone could still be quietly contributing to a billion-dollar AI pipeline without your informed consent.

Fighting Back: Filters That Fool the Algorithm

As awareness spreads, users are beginning to fight back—armed not with legislation, but with creativity. A new trend is emerging across online communities: adversarial filters designed to confuse AI systems. These are specially crafted image overlays that subtly distort pixel patterns in ways that are imperceptible to humans but disruptive to machine vision models.
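The article does not say how these filters are built, but the standard technique behind this kind of perturbation is the adversarial example, and the simplest recipe is the fast gradient sign method (FGSM): move every pixel by a small step ε in whichever direction increases the model's loss. The sketch below applies FGSM to a toy logistic-regression “vision model” over 1,024 pixel intensities in [0, 1]. The weights, the image, and ε = 4/255 are made-up assumptions, and this is not how any specific app is known to work.

```python
import math
from typing import List

Vector = List[float]

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def predict(w: Vector, b: float, x: Vector) -> float:
    # Toy "vision model": logistic regression over flattened pixel intensities in [0, 1].
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(v: float) -> int:
    return (v > 0) - (v < 0)

def fgsm(w: Vector, b: float, x: Vector, y: int, eps: float) -> Vector:
    # For binary cross-entropy, the gradient of the loss w.r.t. the input is (p - y) * w.
    p = predict(w, b, x)
    grad = [(p - y) * wi for wi in w]
    # Step each pixel by eps along the gradient's sign, then clamp to a valid intensity.
    return [min(1.0, max(0.0, xi + eps * sign(g))) for xi, g in zip(x, grad)]

n_pixels = 1024
w = [0.05 if i % 2 == 0 else -0.05 for i in range(n_pixels)]  # made-up weights
b = 0.0
x = [0.52 if i % 2 == 0 else 0.50 for i in range(n_pixels)]   # made-up "photo"

eps = 4 / 255
x_adv = fgsm(w, b, x, y=1, eps=eps)

p_clean = predict(w, b, x)
p_adv = predict(w, b, x_adv)
max_change = max(abs(a - c) for a, c in zip(x_adv, x))
print(f"clean p={p_clean:.3f}, adversarial p={p_adv:.3f}, max pixel change={max_change:.4f}")
```

No pixel moves by more than 4/255 (about 1.6% of the intensity range), yet the prediction flips from class 1 to class 0. For a linear score the reason is easy to see: each pixel shifts the score by ε times the magnitude of its weight, so across 1,024 pixels the total shift is ε × 51.2 ≈ 0.80, larger than the clean score of 0.512.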

Among the most downloaded tools are three open-source apps that apply such filters seamlessly to existing images:

1. FogFace: Developed by a group of German researchers, FogFace adds micro-patterns to photos that interfere with facial recognition algorithms. While a person’s face still looks natural to the human eye, the recognition models running on the NPU struggle to locate facial landmarks, so training fails or the subject is misclassified entirely. This has made it a favorite among users concerned about biometric profiling.

2. CloakLens: Popular in France and the Netherlands, CloakLens targets object detection models. By injecting calculated perturbations into the photo background, it prevents the AI from accurately mapping environmental or contextual elements. A photo of a bookshelf might be interpreted as an abstract blur, while an image of a protest could register as “empty street.” The filter is a powerful tool for activists who want to document events without contributing to data-hungry AI ecosystems.

3. PrismShell: Designed in South Korea, PrismShell focuses on visual embeddings, the vectors used to describe the style and structure of an image. It adds controlled noise to texture layers, confusing generative AI tools that rely on embedding patterns for image prediction or synthesis. PrismShell has been adopted by artists and content creators wary of having their aesthetic replicated, without their knowledge, by generative AI trained on their personal content.

All three filters come with disclaimers: they may reduce photo clarity slightly and can sometimes interfere with legitimate AI features like photo sorting or gallery search. But for users who prioritize digital autonomy, the trade-off is worth it. The rise of these tools signals a growing movement toward adversarial design—products created not just for beauty or utility, but as a form of resistance.
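One common, if crude, way to put a number on “reduce photo clarity slightly” is peak signal-to-noise ratio (PSNR), which compares a perturbed image with the original: higher values mean the two are closer, and identical images give infinity. PSNR is only a proxy for what people actually perceive, and none of the three apps is known to report it. The sketch below assumes pixel intensities in [0, 1] and a uniform ±ε perturbation; the `psnr` helper is hypothetical.

```python
import math
from typing import List

def psnr(original: List[float], perturbed: List[float], max_value: float = 1.0) -> float:
    # Mean squared error between the two images, then PSNR in decibels.
    mse = sum((a - b) ** 2 for a, b in zip(original, perturbed)) / len(original)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)

image = [0.5] * 1024
for levels in (2, 4, 8):
    eps = levels / 255  # perturbation strength in 8-bit intensity levels
    # Alternate +eps / -eps so every pixel moves by exactly eps.
    filtered = [v + (eps if i % 2 == 0 else -eps) for i, v in enumerate(image)]
    print(f"±{levels}/255 -> {psnr(image, filtered):.1f} dB")
```

For a uniform shift the formula reduces to PSNR = 20·log10(1/ε), so perturbations of 4/255 and 8/255 come out at roughly 36 dB and 30 dB. That makes the trade-off explicit: a stronger perturbation is typically more likely to fool a model, and it also lowers PSNR.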

Redefining Consent in the Age of Smart Photos

The issue at the heart of this controversy is not just technical; it is ethical and philosophical. Consent, in the traditional data privacy sense, was rooted in the act of sharing: you upload a photo, you click a checkbox, you understand the terms. But in the era of edge AI, consent is becoming ambient. It is embedded in firmware, buried in terms of service, and activated silently while your phone sits on a nightstand.

The industry’s defense has leaned heavily on “anonymization” and “user benefit.” After all, personalized AI features, like sorting albums by emotion or creating custom memory videos, rely on this training. But the fact remains: users were neither clearly informed about, nor given granular control over, which images were analyzed, how deeply, and for what future purposes.

Experts in AI ethics argue that this moment should serve as a reckoning. Just as the GDPR forced companies to rethink cookies and tracking, a new paradigm is needed for “invisible computation,” especially when that computation touches the most personal archive many people own: their photo gallery.

Potential solutions include mandatory opt-ins for local training processes, real-time logs of what data was used, and clearer interface prompts that give users the ability to exclude certain albums or content types. Some technologists are even proposing a new standard: Visual Data Consent Tokens (VDCTs), which would attach metadata to images flagging them as exempt from training, regardless of device settings.
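Visual Data Consent Tokens are only a proposal, so there is no real format to show. The sketch below assumes the simplest possible version: a per-image metadata key (hypothetically `vdct:training` set to `exempt`) that a training scheduler must honor regardless of device settings, combined with the mandatory opt-in and per-album exclusions proposed in the same paragraph. All names here are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Set

# Hypothetical metadata key and value; no VDCT standard exists yet.
VDCT_KEY = "vdct:training"
VDCT_EXEMPT = "exempt"

@dataclass
class Photo:
    path: str
    album: str
    metadata: Dict[str, str] = field(default_factory=dict)

@dataclass
class ConsentSettings:
    local_training_opt_in: bool = False  # mandatory opt-in: off until the user enables it
    excluded_albums: Set[str] = field(default_factory=set)

def eligible_for_training(photos: Iterable[Photo], settings: ConsentSettings) -> List[Photo]:
    # No opt-in, no training at all.
    if not settings.local_training_opt_in:
        return []
    eligible = []
    for photo in photos:
        if photo.metadata.get(VDCT_KEY) == VDCT_EXEMPT:
            continue  # the token travels with the image and overrides device settings
        if photo.album in settings.excluded_albums:
            continue  # user-level exclusion of whole albums
        eligible.append(photo)
    return eligible

photos = [
    Photo("IMG_0001.jpg", "Travel"),
    Photo("IMG_0002.jpg", "Family", {VDCT_KEY: VDCT_EXEMPT}),
    Photo("IMG_0003.jpg", "Medical"),
    Photo("IMG_0004.jpg", "Travel"),
]

default_run = eligible_for_training(photos, ConsentSettings())
opted_in = eligible_for_training(
    photos, ConsentSettings(local_training_opt_in=True, excluded_albums={"Medical"})
)
print([p.path for p in default_run], [p.path for p in opted_in])
```

Returning the eligible list explicitly, rather than filtering silently inside the training loop, also gives a natural hook for real-time logs: the scheduler could record exactly which files were used in each run.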

Until such changes are widely adopted, users remain caught between the promise of smart personalization and the threat of silent exploitation. Your smartphone has become a double agent: part helpful assistant, part passive researcher feeding corporate AI ambitions. And unless transparency catches up with technology, your most intimate digital memories may continue training machines you never agreed to support.
