The Need for a New Video Understanding Benchmark

Limitations of Current Evaluation Benchmarks

The GPT – 4o April launch sparked a boom in video understanding, and the open – source leader Qwen2 also demonstrated its prowess in various video evaluation benchmarks. However, most current evaluation benchmarks have several flaws. They mainly focus on short videos, with insufficient video length or number of video shots, making it difficult to assess the model’s long – term sequential understanding ability. The evaluation of models is limited to relatively simple tasks, and many finer – grained capabilities are not covered by most benchmarks. Existing benchmarks can still achieve high scores with a single frame image, indicating weak sequential correlation between questions and video frames. The assessment of open – ended questions still uses the older GPT – 3.5, resulting in significant 偏差 between scoring and human preferences and inaccurate evaluations that often overestimate model performance. So, is there a benchmark that can better address these issues?

MMBench – Video: A New Hope

In the latest NeurIPS D&B 2024, a comprehensive open – ended video understanding evaluation benchmark, MMBench – Video, was proposed by Zhejiang University in collaboration with Shanghai AI Laboratory, Shanghai Jiao Tong University, and The Chinese University of Hong Kong. It also created an open – source evaluation list for the video understanding capabilities of current mainstream MLLMs.

The Superiority of MMBench – Video Dataset

High – Quality Dataset with Full – chain Coverage

The MMBench – Video evaluation benchmark for video understanding is fully manually annotated, undergoing primary annotation and secondary quality verification. It features a rich variety of high – quality videos, and the questions and answers comprehensively cover the model’s capabilities. Answering questions accurately requires extracting information across the time dimension, better assessing the model’s sequential understanding ability.

Distinctive Features of MMBench – Video

Compared with other datasets, MMBench – Video has several prominent features. It has a wide span of video durations and variable number of shots. The collected video lengths range from 30 seconds to 6 minutes, avoiding the problems of simple semantic information in very short videos and high resource consumption in evaluating very long videos. The number of shots in the videos has an overall long – tailed distribution, with a video having up to 210 shots, containing rich scene and context information.

A Comprehensive Test of All – round Abilities

A model’s video understanding ability mainly consists of perception and reasoning, and each part can be further refined. Inspired by MMBench and combined with the specific capabilities involved in video understanding, researchers have established a comprehensive capability spectrum containing 26 fine – grained capabilities. Each fine – grained capability is evaluated with dozens to hundreds of question – answer pairs, and it is not a simple collection of existing tasks.

Rich Video Types and Diverse Question – answer Languages

It covers 16 major fields such as humanities, sports, science and education, cuisine, and finance, with each field accounting for more than 5% of the videos. At the same time, the question – answer pairs have further improvements in length and semantic richness compared to traditional VideoQA datasets, not limited to simple question types like ‘what’ and ‘when’.

Good Temporal Independence and High – quality Annotation

In the research, it was found that most VideoQA datasets can obtain sufficient information from just one frame within the video to answer accurately. This may be because the changes between frames in the video are small, there are few video shots, or the quality of the question – answer pairs is low. Researchers call this poor temporal independence of the dataset. Compared with them, MMBench – Video has significantly lower temporal independence due to detailed rule – based restrictions during annotation and secondary verification of question – answer pairs, enabling better assessment of the model’s sequential understanding ability.

Performance Evaluation of Mainstream Multimodal Models

Evaluating Multiple Models on MMBench – Video

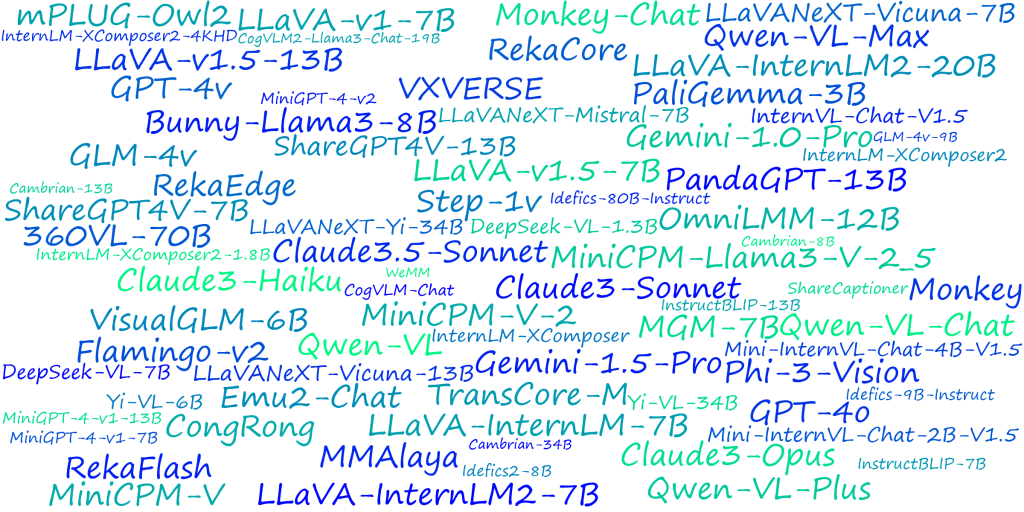

To more comprehensively evaluate the video understanding performance of multiple models, MMBench – Video selected 11 representative video – language models, 6 open – source image – text multimodal large models, and 5 closed – source models like GPT – 4o for comprehensive experimental analysis.

Surprising Results and Insights

Among all the models, GPT – 4o performs outstandingly in video understanding, and Gemini – Pro – v1.5 also shows excellent model performance. Surprisingly, the existing open – source image – text multimodal large models perform better overall on MMBench – Video than the video – question – pair – fine – tuned video – language models. The best image – text model, VILA1.5, outperforms the best video model, LLaVA – NeXT – Video, by nearly 40% in overall performance.

Reasons behind the Performance Differences

Further investigation reveals that the reason image – text models perform better in video understanding may be that they have stronger fine – grained processing capabilities when handling static visual information. Video – language models have deficiencies in static image perception and reasoning performance, and thus struggle when faced with more complex sequential reasoning and dynamic scenes. This difference reveals significant deficiencies in current video models’ spatial and temporal understanding, especially when handling long – video content, and their sequential reasoning ability urgently needs improvement. In addition, the performance improvement of image – text models in reasoning through multi – frame input indicates that they have the potential to further expand into the video understanding field, while video models need to strengthen learning in a wider range of tasks to bridge this gap.

Impact of Video Length and Shot Number on Model Performance

Video length and shot number are considered key factors affecting model performance. Experimental results show that as the video length increases, the performance of GPT – 4o with multi – frame input decreases, while the performance of open – source models such as InternVL – Chat – v1.5 and Video – LLaVA remains relatively stable. Compared with video length, the number of shots has a more significant impact on model performance. When the number of video shots exceeds 50, the performance of GPT – 4o drops to 75% of its original score. This indicates that frequent shot changes make it more difficult for the model to understand the video content, leading to performance degradation.

The Role of Subtitles and Audio Information

In addition, MMBench – Video also obtains subtitle information of videos through an interface, thereby introducing the audio modality through text. After introduction, the model’s performance in video understanding has been significantly improved. When audio signals are combined with visual signals, the model can answer complex questions more accurately. This experimental result shows that the addition of subtitle information can greatly enrich the model’s context understanding ability. Especially in long – video tasks, the information density of the speech modality provides the model with more clues to generate more accurate answers. However, it should be noted that although speech information can improve model performance, it may also increase the risk of generating hallucination content.

The Choice of Referee Model

In terms of referee model selection, experiments show that GPT – 4 has more fair and stable scoring capabilities, with strong anti – manipulation properties and scoring that is not biased towards its own answers, aligning better with human judgment. In contrast, GPT – 3.5 tends to have higher scores during scoring, leading to distorted final results. Meanwhile, open – source large – language models, such as Qwen2 – 72B – Instruct, also show excellent scoring potential, with outstanding alignment with human judgment, proving that they have the potential to become an efficient evaluation model tool.

One – click Evaluation with VLMEvalKit and the OpenVLM Video Leaderboard

MMBench – Video currently supports one – click evaluation in VLMEvalKit. VLMEvalKit is an open – source toolkit designed specifically for evaluating large visual – language models. It supports one – click evaluation of large visual – language models on various benchmark tests without the need for heavy data preparation, making the evaluation process more convenient. VLMEvalKit is applicable to the evaluation of image – text multimodal models and video multimodal models, supporting single – pair image – text input, interleaved image – text input, and video – text input. It implements more than 70 benchmark tests, covering multiple tasks including but not limited to image captioning, visual question answering, and image subtitle generation. The supported models and evaluation benchmarks are constantly being updated.

Based on the reality that the evaluation results of existing video multimodal models are relatively scattered and difficult to reproduce, the team has also established the OpenVLM Video Leaderboard, a comprehensive evaluation list for the video understanding capabilities of models. The OpenCompass VLMEvalKit team will continue to update the latest multimodal large models and evaluation benchmarks, creating a mainstream, open, and convenient multimodal open – source evaluation system.

Conclusion

In summary, MMBench – Video is a new long – video, multi – shot benchmark designed for video understanding tasks, covering a wide range of video content and fine – grained capability evaluation. The benchmark test contains more than 600 long videos collected from YouTube, covering 16 major categories such as news and sports, aiming to evaluate the spatio – temporal reasoning abilities of MLLMs. Different from traditional video – question – answer benchmarks, MMBench – Video makes up for the deficiencies of existing benchmarks in sequential understanding and complex – task processing by introducing long videos and high – quality manually annotated question – answer pairs. By using GPT – 4 to evaluate the model’s answers, this benchmark shows higher evaluation accuracy and consistency, providing a powerful tool for model improvement in the video understanding field. The introduction of MMBench – Video provides researchers and developers with a powerful evaluation tool, helping the open – source community to deeply understand and optimize the capabilities of video – language models.

Discussion about this post