Introduction

The rise of autonomous vehicles (AVs) has the potential to revolutionize the transportation industry, making travel safer, more efficient, and more accessible. However, as artificial intelligence (AI) takes the wheel, it raises profound ethical questions: How can we trust these AI systems to make life-and-death decisions? How do we ensure fairness, transparency, and accountability in a system that relies heavily on machine learning and algorithms? As AVs become increasingly prevalent, addressing the ethical implications of AI decision-making is crucial for the future of transportation. This article explores the key ethical issues surrounding AI in autonomous vehicles and discusses how we can ensure these systems are safe, unbiased, and trustworthy.

1. Understanding Autonomous Vehicles and AI Decision-Making

1.1 The Technology Behind Autonomous Vehicles

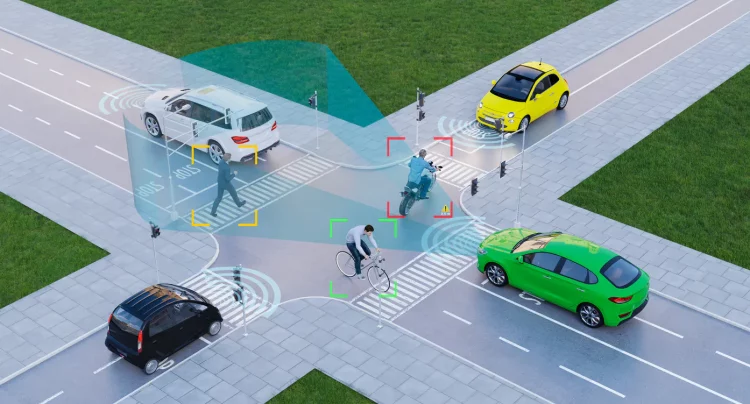

Autonomous vehicles rely on a complex combination of AI, machine learning, sensors, and data to operate without human intervention. These systems include:

- Lidar and radar: Used for detecting obstacles, other vehicles, and pedestrians.

- Cameras and computer vision: Help the vehicle interpret its environment and identify traffic signals, road markings, and signs.

- AI algorithms: Machine learning algorithms process data from lidar, radar, cameras, and other sources to make decisions about navigation, speed, and safety.

1.2 AI’s Role in Decision-Making

In autonomous vehicles, AI is responsible for making real-time decisions about navigation, speed, and, most importantly, how to react to potential hazards. The AI must constantly analyze the vehicle’s surroundings, interpret sensor data, and decide on the safest course of action. For example, if a pedestrian suddenly steps into the road, the AI has to decide whether to brake, swerve, or take another action, and that choice can carry complex ethical considerations.
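To make the brake-or-swerve choice concrete, here is a minimal sketch of one physics check such a system might run. It assumes constant deceleration, so stopping distance is reaction distance plus braking distance, v·t + v²/(2a). The function names, the `lateral_clear` flag, and the default deceleration (7 m/s²) and reaction time (0.2 s) are hypothetical illustration values, not figures from any real AV stack.

```python
from dataclasses import dataclass


@dataclass
class Hazard:
    distance_m: float    # distance to the pedestrian along the current path
    lateral_clear: bool  # hypothetical flag: is an adjacent lane free to swerve into?


def stopping_distance(speed_mps: float, decel_mps2: float, reaction_s: float) -> float:
    """Reaction distance plus braking distance at constant deceleration: v*t + v^2 / (2a)."""
    return speed_mps * reaction_s + speed_mps ** 2 / (2 * decel_mps2)


def choose_action(speed_mps: float, hazard: Hazard,
                  decel_mps2: float = 7.0, reaction_s: float = 0.2) -> str:
    """Toy rule: brake if we can stop in time, else swerve if a lane is clear."""
    if stopping_distance(speed_mps, decel_mps2, reaction_s) <= hazard.distance_m:
        return "brake"
    if hazard.lateral_clear:
        return "swerve"
    # Cannot stop in time and no safe escape path: brake hard to shed as much speed as possible.
    return "emergency_brake"


# At 15 m/s (54 km/h) the stopping distance is 3 + 225/14, roughly 19.1 m.
print(choose_action(15.0, Hazard(distance_m=25.0, lateral_clear=False)))  # brake
print(choose_action(15.0, Hazard(distance_m=10.0, lateral_clear=True)))   # swerve
```

Even this toy rule shows where the ethics enters: the final fallback branch is a value judgment, not a physics result.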

2. Ethical Issues in Autonomous Vehicle AI

As AVs take on the responsibility of driving, they must make decisions that involve ethical dilemmas. The following are some of the key ethical challenges that arise in the realm of autonomous driving:

2.1 The Trolley Problem and Moral Decision-Making

One of the most discussed ethical dilemmas in autonomous vehicles is an adaptation of the “trolley problem,” a classic thought experiment in ethics. In this scenario, an autonomous vehicle must decide whether to:

- Swerve and hit one pedestrian, sparing several others but putting its own passengers at greater risk.

- Stay on course and hit several pedestrians, but keep its passengers safe.

This dilemma underscores the challenge of programming AVs to make moral decisions. AI systems may be faced with situations where there is no “perfect” choice. Should the vehicle prioritize the safety of its passengers over the safety of pedestrians, or vice versa? What criteria should the AI use to make such decisions?
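One way to see why the “criteria” question matters is to write the dilemma as a toy cost function. The sketch below assumes a hypothetical `passenger_weight` that sets how much passenger risk counts relative to pedestrian risk. The people-at-risk counts are invented for illustration, and this is not how any particular AV planner is known to work.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    pedestrians_at_risk: int
    passengers_at_risk: int


def harm_cost(option: Option, passenger_weight: float) -> float:
    # passenger_weight > 1 favours passengers; < 1 favours pedestrians.
    return option.pedestrians_at_risk + passenger_weight * option.passengers_at_risk


def choose(options: list[Option], passenger_weight: float) -> str:
    # Pick the option with the lowest weighted cost (ties go to the first option listed).
    return min(options, key=lambda o: harm_cost(o, passenger_weight)).name


options = [
    Option("swerve", pedestrians_at_risk=1, passengers_at_risk=2),
    Option("stay", pedestrians_at_risk=3, passengers_at_risk=0),
]
print(choose(options, passenger_weight=0.5))  # swerve (cost 2 vs 3)
print(choose(options, passenger_weight=2.0))  # stay   (cost 5 vs 3)
```

The arithmetic is trivial; the hard part is that the outcome flips entirely on `passenger_weight`, a number no amount of engineering can choose on its own.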

2.2 Bias and Fairness in AI Decision-Making

Another critical ethical issue in autonomous vehicles is the potential for algorithmic bias. If AI systems are trained on biased data, they may make unfair or discriminatory decisions. For instance, if the data used to train the AI system does not sufficiently represent minority groups or vulnerable populations, the vehicle may have trouble detecting and responding to pedestrians with different skin tones, body sizes, or disabilities.

Ensuring that AI systems are fair and free from bias requires diverse and representative datasets. Moreover, it is essential that developers regularly audit and test AI systems for potential biases to prevent unfair outcomes.
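A basic form of such an audit compares detection recall (the share of real pedestrians the system actually detected) across groups in a labelled test set. The sketch below assumes each record is a hypothetical `(group_label, was_detected)` pair and flags any group whose recall trails the best-performing group by more than a chosen tolerance. The 0.05 default is an illustrative assumption, not a standard.

```python
from collections import defaultdict


def recall_by_group(records):
    """records: iterable of (group_label, was_detected) for pedestrians who were really present."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for group, detected in records:
        totals[group] += 1
        hits[group] += int(detected)
    return {g: hits[g] / totals[g] for g in totals}


def flag_disparities(recalls, max_gap=0.05):
    """Return groups whose recall is more than max_gap below the best group's recall."""
    best = max(recalls.values())
    return sorted(g for g, r in recalls.items() if best - r > max_gap)


# Hypothetical test-set results: group A detected 9/10 times, group B 7/10 times.
records = [("A", i < 9) for i in range(10)] + [("B", i < 7) for i in range(10)]
recalls = recall_by_group(records)
print(recalls)                    # {'A': 0.9, 'B': 0.7}
print(flag_disparities(recalls))  # ['B']
```

Run on every new model version, a check like this turns “audit for bias” into a concrete gate that a release must pass.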

2.3 Accountability and Liability

When an autonomous vehicle is involved in an accident, who is responsible? Is it the car manufacturer, the software developer, the owner of the vehicle, or the AI itself? The lack of clear legal frameworks and accountability in the case of an AV-related incident presents a significant ethical challenge.

It is critical to establish guidelines for assigning responsibility when things go wrong. Clear accountability systems need to be put in place to ensure that victims can seek justice and compensation, and that developers can be held accountable for faulty systems or unethical decisions.

2.4 Transparency and Trust in AI Systems

For the general public to trust autonomous vehicles, people need transparency in how AI systems make decisions. If an AI system decides to swerve and causes an accident, passengers, pedestrians, and society at large need to understand the reasoning behind that decision. Without transparency, AI-driven decisions may seem arbitrary or unjust.

Developers need to ensure that the decision-making processes of AVs are explainable and understandable. This would increase public trust and acceptance of the technology, helping to address the concerns about “black-box” AI systems that cannot be scrutinized.

3. Ensuring Ethical AI in Autonomous Vehicles

To address the ethical issues raised by AI in autonomous vehicles, developers, policymakers, and researchers must work together to create frameworks that ensure fairness, accountability, and transparency. Below are some strategies that can help ensure that AI systems in autonomous vehicles make ethical and trustworthy decisions.

3.1 Ethical Frameworks and Guidelines

One way to address ethical dilemmas in AVs is to develop ethical frameworks and guidelines for decision-making. These guidelines should provide clear rules on how AI should prioritize different factors—such as safety, fairness, and justice—when making decisions. International organizations, governments, and industry groups must work together to establish universally accepted ethical standards for AV development.

For example, an ethical framework could mandate that an autonomous vehicle always prioritize human life over property damage, or that it follow a set of principles ensuring its actions align with societal norms and expectations.
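A rule like “human life over property” maps naturally onto a lexicographic ordering: compare candidate maneuvers on expected human harm first, and use property damage only to break ties. The sketch below assumes hypothetical harm and damage estimates per maneuver. Python compares tuples element by element, which gives exactly this strict priority.

```python
from typing import NamedTuple


class Outcome(NamedTuple):
    maneuver: str
    human_harm: float       # hypothetical estimate of expected injuries
    property_damage: float  # hypothetical estimate of damage cost


def pick(outcomes: list[Outcome]) -> str:
    # Tuples compare element by element, so property_damage only matters
    # when two maneuvers have identical human_harm.
    return min(outcomes, key=lambda o: (o.human_harm, o.property_damage)).maneuver


print(pick([
    Outcome("hit_barrier", human_harm=0.0, property_damage=20_000.0),
    Outcome("brake_in_lane", human_harm=0.3, property_damage=0.0),
]))  # hit_barrier: no amount of property damage outweighs any human harm
```

The design choice is deliberate: unlike a weighted sum, a lexicographic rule cannot be “outvoted” by a large enough property cost, which matches the absolute priority the framework describes.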

3.2 Bias Mitigation and Diverse Training Data

To avoid bias in AI decision-making, it is crucial to ensure that autonomous vehicle AI systems are trained on diverse and representative datasets. This will reduce the risk of discrimination against certain demographic groups and ensure that the system performs equally well for all pedestrians, drivers, and passengers, regardless of race, gender, or disability.

Additionally, continuous monitoring and testing of AI systems for fairness and bias should be a routine part of the development process. Developers should use ethical testing practices to identify and correct any discrepancies in how the AI handles different situations.

3.3 Transparency and Explainable AI

AI in autonomous vehicles should be explainable, meaning that the reasoning behind its decisions can be understood by humans. This is especially important in situations where the vehicle has to make difficult ethical choices, such as deciding whom to harm in an unavoidable accident.

Developers should focus on making AI systems transparent by designing models that allow human operators to understand and validate the decision-making processes of the vehicle. This could involve providing a detailed explanation of the factors that influenced the decision, including the vehicle’s surroundings, sensor data, and ethical considerations.
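For a linear scoring model, such an explanation is exact: each factor’s contribution is its weight times its value, and ranking contributions by magnitude shows what drove the decision. The sketch below uses hypothetical feature names and weights. Real AV perception relies mostly on deep networks, where attribution methods such as SHAP or integrated gradients can only approximate this kind of breakdown.

```python
def explain_linear_score(weights: dict[str, float], features: dict[str, float], top_k: int = 3):
    """Return the score and the top_k factors ranked by absolute contribution."""
    contributions = {name: w * features.get(name, 0.0) for name, w in weights.items()}
    score = sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked[:top_k]


# Hypothetical risk model: higher score means braking is more urgent.
weights = {"pedestrian_proximity": 2.0, "speed": 0.5, "visibility": -1.0}
features = {"pedestrian_proximity": 0.9, "speed": 1.2, "visibility": 0.3}

score, top_factors = explain_linear_score(weights, features)
print(round(score, 2))  # 2.1
for name, contribution in top_factors:
    print(f"{name}: {contribution:+.2f}")
```

Stored alongside the sensor data, a ranked list like this gives investigators and the public a human-readable account of why the vehicle acted.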

3.4 Developing Clear Accountability Models

Clear guidelines are necessary to determine liability and accountability in the case of accidents involving autonomous vehicles. Policymakers should collaborate with legal experts to establish frameworks that assign responsibility for accidents, whether it’s the car manufacturer, software developers, or the vehicle owner.

For example, there should be clear regulations about data collection and retention, ensuring that data logs are stored and accessible in the event of an incident. These logs can help determine the root cause of the problem and clarify who is responsible for the vehicle’s actions.
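Logs are only useful as evidence if nobody can quietly alter them after a crash. One common technique is a hash chain: each entry stores the SHA-256 hash of its contents together with the previous entry’s hash, so editing any past entry breaks every link after it. The sketch below is a minimal illustration with hypothetical event fields. It detects tampering only if the latest hash is also kept somewhere the tamperer cannot rewrite, such as with a regulator or third party.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    body = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_event(log: list, event: dict) -> None:
    """Append an event, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    event = dict(event)  # copy so later edits by the caller don't change the log
    log.append({"prev": prev_hash, "event": event, "hash": _digest(prev_hash, event)})


def verify(log: list) -> bool:
    """Recompute every link; any edited, removed, or reordered entry makes this fail."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


log: list = []
append_event(log, {"t": 0.0, "speed_mps": 15.0, "action": "cruise"})
append_event(log, {"t": 0.2, "speed_mps": 14.1, "action": "brake"})
print(verify(log))  # True
log[0]["event"]["speed_mps"] = 5.0  # simulate after-the-fact tampering
print(verify(log))  # False
```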

4. The Road Ahead: Trusting Driverless Cars

As autonomous vehicles become more advanced and widespread, public trust will play a significant role in their adoption. To ensure that society can trust driverless cars, the development of ethical AI systems and clear accountability mechanisms is crucial. Governments, industry leaders, and the public need to collaborate to address the challenges posed by AI ethics in autonomous vehicles.

Education and awareness are also key. People need to be informed about how AI in autonomous vehicles works, how ethical decisions are made, and how the systems are being developed and tested for fairness and transparency. Open discussions and debates on AI ethics will help foster trust and ensure that autonomous vehicles are integrated into society in a way that is both safe and ethical.

Conclusion

AI in autonomous vehicles presents a host of exciting opportunities for the future of transportation, but it also brings significant ethical challenges. By addressing issues such as moral decision-making, bias, transparency, and accountability, we can ensure that autonomous vehicles operate fairly, responsibly, and safely. The development of ethical AI frameworks, diverse datasets, and transparent systems will be key to fostering public trust and ensuring that driverless cars live up to their potential as a transformative technology. Only through collaboration between developers, policymakers, and the public can we ensure that AI in autonomous vehicles is used in a way that benefits society as a whole.
