Synesthesia, the phenomenon where one sensory experience is linked to another (such as seeing colors when hearing music or tasting flavors when reading words), offers a fascinating glimpse into how human perception works. This merging of senses blurs the boundaries between sensory modalities, allowing those who have it, called synesthetes, to perceive the world in a uniquely interconnected way. The big question, however, is whether AI can replicate or even understand this complex, subjective experience.

This article explores how AI might comprehend and potentially recreate synesthetic experiences. We’ll look at the intersection of artificial intelligence, neuroscience, and creative expression, asking whether machines can “feel” synesthesia the way humans do or whether they can only simulate it from a technical standpoint.

Understanding Synesthesia: A Multisensory Wonderland

Before diving into AI’s relationship with synesthesia, it’s crucial to understand what synesthesia actually is. Synesthesia occurs when the stimulation of one sensory or cognitive pathway leads to an involuntary experience in another. For example, a person with chromesthesia might see colors when they hear music, or someone with grapheme-color synesthesia might see specific colors when they read certain letters or numbers. This experience is entirely subjective and varies from person to person.

The phenomenon is often described as a merging of the senses, but it is more accurate to say that the senses “overlap” in synesthesia. A synesthete might not just see colors when they hear sounds—they might “feel” them too, or “taste” them, creating a synesthetic reality that is different from how others perceive the same stimuli.

The Intersection of AI and Synesthesia

Now that we have a foundational understanding of synesthesia, let’s turn to the question: can AI understand or create these multisensory experiences? At first glance, the idea of a machine experiencing synesthesia seems far-fetched, but AI systems that mimic or simulate aspects of human perception are advancing quickly. To break the question down, let’s consider two components: understanding and creating.

Can AI Understand Synesthesia?

To understand synesthesia, AI would need to grasp its basis in human perception. Synesthesia is thought to arise from differences in neural wiring and from cross-talk between sensory regions of the brain. In a synesthetic brain, sensory experiences are not isolated; they form interconnected networks in which an auditory stimulus might trigger a visual one, and so on. But can AI “understand” this sensory crossover?

In traditional AI systems, understanding usually comes down to the ability to process, recognize, and categorize patterns in data. Neural networks and deep learning models can be trained to recognize patterns in visual, auditory, and textual data. However, AI cannot “feel” these patterns in the same way humans experience them. While AI can model the occurrence of one sensory stimulus leading to another, it lacks the embodied perception that makes synesthesia so unique to humans.

Yet, AI can still “understand” synesthesia to a degree—albeit in a technical, data-driven way. By analyzing massive datasets of synesthetic experiences shared by humans, AI could identify trends and correlations, making it possible to predict or replicate the specific combinations of sensory stimuli associated with different types of synesthesia. This is more akin to AI understanding synesthesia as a scientific or statistical phenomenon rather than as a lived, subjective experience.
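To make this “statistical” kind of understanding concrete, here is a minimal sketch. It assumes synesthetes’ reports have been collected as (participant, letter, color) triples; the data below is invented purely for illustration, and `letter_color_consensus` is a hypothetical helper, not an established tool. For each letter it finds the most commonly reported color and how strongly participants agree on it.

```python
from collections import Counter, defaultdict

# Invented, illustrative reports: (participant_id, letter, color_name).
# A real study would use many more participants and a standardized color picker.
REPORTS = [
    ("p1", "A", "red"), ("p2", "A", "red"), ("p3", "A", "black"),
    ("p1", "B", "blue"), ("p2", "B", "brown"), ("p3", "B", "blue"),
    ("p1", "C", "yellow"), ("p2", "C", "yellow"), ("p3", "C", "yellow"),
]

def letter_color_consensus(reports):
    """Hypothetical helper: map each letter to (most common color, share of reports naming it)."""
    by_letter = defaultdict(Counter)
    for _participant, letter, color in reports:
        by_letter[letter][color] += 1
    summary = {}
    for letter, counts in sorted(by_letter.items()):
        color, n = counts.most_common(1)[0]
        summary[letter] = (color, n / sum(counts.values()))
    return summary

for letter, (color, share) in letter_color_consensus(REPORTS).items():
    print(f"{letter}: {color} ({share:.0%} of reports)")
```

A letter where most reports agree points to a widely shared association; a low share suggests the pairing is more individual. This is pattern-finding over reports, not perception.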

Can AI Create Synesthesia?

Creating synesthetic experiences is a different challenge altogether. Here, AI’s potential is clearer, especially in creative fields. While AI can’t experience synesthesia, it can simulate it, using digital tools to combine different sensory modalities in creative ways.

1. Generating Visual Music (Chromesthesia)

One way AI could “create” synesthesia is by generating visual representations of music, similar to how people with chromesthesia might see colors when they hear certain notes or melodies. AI can be trained on large datasets of music and associated color patterns, either provided by synesthetes themselves or based on theoretical color-music associations found in neuroscience. For example, an AI could be trained to generate colorful visuals that match the mood or tonal quality of a piece of music, mimicking the experience of synesthesia.
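As a rough illustration of the simplest possible music-to-color mapping, the sketch below converts note frequencies to pitch classes (assuming equal temperament with A4 = 440 Hz) and assigns each pitch class a hue. The hue assignment, which spreads the 12 pitch classes evenly around the color wheel, is an arbitrary assumption chosen for illustration; it is not drawn from synesthete data, and a trained model would learn its mapping from such data instead. It also skips pitch detection: the melody is given directly as frequencies.

```python
import colorsys
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def frequency_to_pitch_class(freq_hz: float) -> int:
    """Map a frequency to a pitch class 0-11 (C = 0), assuming A4 = 440 Hz equal temperament."""
    midi_note = round(69 + 12 * math.log2(freq_hz / 440.0))
    return midi_note % 12

def pitch_class_to_rgb(pitch_class: int, brightness: float = 1.0) -> tuple[int, int, int]:
    """Assumed mapping: spread the 12 pitch classes evenly around the hue circle.

    `brightness` could carry another musical feature, such as loudness.
    """
    hue = pitch_class / 12.0
    r, g, b = colorsys.hsv_to_rgb(hue, 0.8, brightness)
    return round(r * 255), round(g * 255), round(b * 255)

# A short melody given as frequencies in Hz (C4, E4, G4, A4).
melody = [261.63, 329.63, 392.00, 440.00]
for f in melody:
    pc = frequency_to_pitch_class(f)
    print(f"{f:7.2f} Hz -> {NOTE_NAMES[pc]:2} -> RGB {pitch_class_to_rgb(pc)}")
```

A fixed rule like this is fully predictable; training on data from synesthetes, as described above, would replace `pitch_class_to_rgb` with learned associations.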

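In the art world, this concept is already being explored with AI tools that combine audio and visual elements. Well-known generative models such as OpenAI’s DALL·E and Google’s DeepDream do not work from sound directly, however: DALL·E generates images from text prompts, and DeepDream amplifies the patterns a neural network detects in existing images. To produce visuals from music, such models have to be paired with a step that translates audio into something they accept, such as a text description of the piece. Combined this way, they push the boundaries of what could be considered a “synesthetic” artwork.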

2. AI-Driven Text-to-Color Models

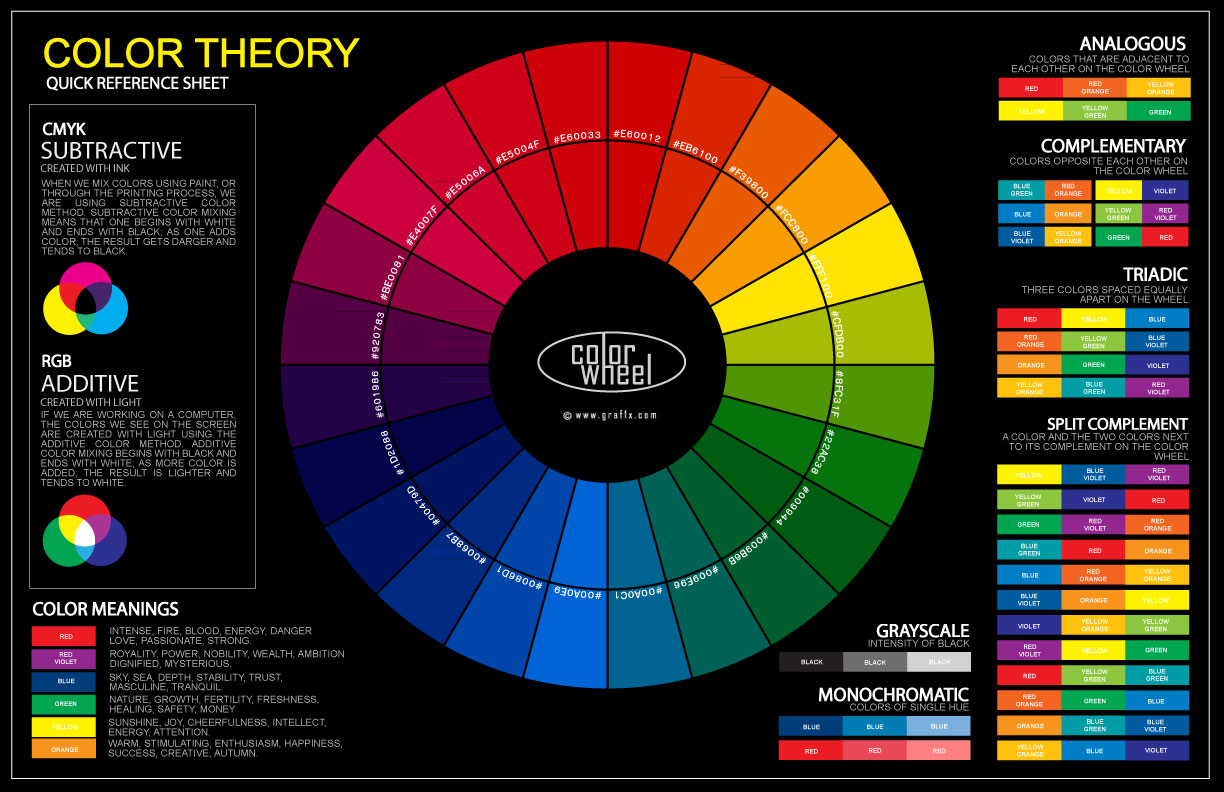

Another way AI could simulate synesthesia is by assigning colors to words or letters. For synesthetes with grapheme-color synesthesia, each letter or number has its own consistent color. Developers could build algorithms that simulate this experience by mapping colors to text in ways that match the associations synesthetes report.

For example, a model trained on thousands of letter-color pairings reported by synesthetes could learn to associate specific letters with particular colors. Once trained, it could take a piece of text and color it according to these learned associations. This wouldn’t be a true synesthetic experience for the AI, but it could effectively simulate the output of a synesthetic mind.
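Here is a deliberately tiny sketch of that train-then-color pipeline. The training pairs are invented, and “training” is just averaging the RGB values reported for each letter, which is the simplest possible stand-in; a real model would also need to generalize to letters it has not seen. `learn_letter_colors` and `colorize_html` are hypothetical names, and the output is HTML with one colored `<span>` per character.

```python
from collections import defaultdict
from html import escape

# Invented training pairs: (letter, (R, G, B)) as a synesthete might report them.
TRAINING_PAIRS = [
    ("a", (220, 30, 40)), ("a", (200, 50, 60)),
    ("b", (30, 60, 200)), ("b", (50, 80, 220)),
    ("c", (240, 220, 40)),
]

def learn_letter_colors(pairs):
    """'Train' by averaging the reported RGB values for each (lowercased) letter."""
    sums = defaultdict(lambda: [0, 0, 0])
    counts = defaultdict(int)
    for letter, rgb in pairs:
        key = letter.lower()
        for i in range(3):
            sums[key][i] += rgb[i]
        counts[key] += 1
    return {k: tuple(round(s / counts[k]) for s in v) for k, v in sums.items()}

def colorize_html(text, letter_colors, default=(0, 0, 0)):
    """Wrap each character in a <span> colored by the learned map (default: black)."""
    spans = []
    for ch in text:
        r, g, b = letter_colors.get(ch.lower(), default)
        spans.append(f'<span style="color: rgb({r}, {g}, {b})">{escape(ch)}</span>')
    return "".join(spans)

colors = learn_letter_colors(TRAINING_PAIRS)
print(colors["a"])  # average of the two reports for "a"
print(colorize_html("Cab", colors))
```

Characters without a learned color (spaces, punctuation, unseen letters) fall back to `default`, which keeps the output readable.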

3. Multi-Sensory AI Experiences in Virtual Reality

Another exciting development is the use of AI to create multisensory experiences in virtual reality (VR). Imagine a VR world where you hear a sound and see a corresponding visual pattern, or even “feel” it through haptic feedback devices. With AI algorithms that map signals from one sensory modality to another, it’s possible to build environments where virtual sounds are paired with visually striking colors or tactile sensations, mimicking the kinds of multisensory experiences that synesthetes report.
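A minimal sketch of the per-frame mapping such an environment might run is below. It assumes audio arrives as short frames of samples. Everything here is an illustrative assumption, not a VR engine’s API: the sample rate, the frame size, the use of loudness (RMS) to drive vibration strength, and the use of zero-crossing rate, a crude pitch proxy, to drive hue. An AI model could replace the hand-written `map_to_outputs` with a learned mapping.

```python
import math

SAMPLE_RATE = 8000                 # samples per second (assumed)
FRAME_SIZE = 400                   # 50 ms frames at this rate
FULL_SCALE_RMS = 1 / math.sqrt(2)  # RMS of a full-amplitude sine wave

def synth_tone(freq_hz, seconds, amplitude):
    """Generate a test sine tone so the example needs no audio files."""
    n = int(SAMPLE_RATE * seconds)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i in range(n)]

def frame_features(frame):
    """Return loudness (RMS) and a rough pitch estimate from the zero-crossing rate, in Hz."""
    rms = math.sqrt(sum(x * x for x in frame) / len(frame))
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a < 0) != (b < 0))
    # A sine at f Hz crosses zero about 2f times per second.
    zcr_hz = crossings * SAMPLE_RATE / (2 * len(frame))
    return rms, zcr_hz

def map_to_outputs(rms, zcr_hz):
    """Hypothetical cross-modal rule: louder -> stronger vibration, higher -> further round the hue wheel."""
    haptic = min(1.0, rms / FULL_SCALE_RMS)  # vibration strength, 0 to 1
    hue = min(1.0, zcr_hz / 2000.0)          # hue, 0 to 1 (capped at 2 kHz)
    return {"haptic_intensity": round(haptic, 2), "hue": round(hue, 2)}

# A quiet low tone followed by a loud high tone.
signal = synth_tone(220, 0.1, 0.2) + synth_tone(880, 0.1, 0.9)
for start in range(0, len(signal), FRAME_SIZE):
    frame = signal[start:start + FRAME_SIZE]
    print(f"t={start / SAMPLE_RATE:.2f}s", map_to_outputs(*frame_features(frame)))
```

Zero-crossing rate only works well for simple tones; richer audio would call for spectral analysis, but the structure (extract features, then map them to visual and tactile outputs) stays the same.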

AI and the Limits of Perception

While AI is making strides in mimicking sensory experiences, it’s essential to recognize that there are inherent limitations. Synesthesia is not just about combining sensory modalities; it’s about the lived experience, the internal, subjective way in which one person’s brain interprets the world. No matter how advanced AI becomes, it cannot “feel” synesthesia as humans do. The human brain has a complex, embodied connection to sensory perception, informed by emotions, memories, and consciousness—factors that are entirely outside the realm of current AI capabilities.

AI can certainly simulate the patterns associated with synesthesia, but it cannot experience them. This is a crucial distinction. The essence of synesthesia lies in the embodied, emotional, and personal experience of the individual, and AI—no matter how sophisticated—lacks the ability to live those experiences.

The Future of AI and Synesthesia

The possibilities for AI in creating synesthesia-like experiences are vast, especially in the fields of art, music, and virtual reality. As AI tools evolve, the potential for merging different sensory modalities will continue to grow. For example, AI could be used to help artists and musicians explore new ways of thinking about their craft, combining sound, color, texture, and movement in ways that mimic synesthetic experiences.

Furthermore, as AI becomes more integrated into healthcare, there may be applications in understanding and replicating synesthetic experiences for individuals with neurological conditions. For example, it might be possible to create therapeutic VR experiences that simulate synesthesia to help patients with sensory processing disorders or even to aid in cognitive development for children with autism.

Despite all this potential, the key challenge will always remain: AI, for all its power, can never truly “feel” synesthesia. It can mimic, simulate, and understand patterns, but it cannot experience the profound interconnectedness of the senses that synesthesia provides. As such, while AI may open new doors for creativity and scientific understanding, it cannot replicate the very human quality of perception that makes synesthesia such a unique and personal phenomenon.

Conclusion

So, can AI understand and create synesthesia? In terms of understanding, AI can model and analyze the patterns that underpin synesthetic experiences, but it will never truly understand them as a human does. In terms of creation, AI can simulate synesthesia by blending sensory modalities in art, music, and virtual environments, providing a new lens through which we can explore the multisensory world of synesthesia. The lived experience of synesthesia, however, will remain uniquely human.

AI might not “see” or “feel” synesthesia, but it can help us see it in new ways, allowing us to explore the connections between our senses in ways that were previously unimaginable.
