Decoding implies uncovering hidden signals. But human emotions are complex, involving:

- Physiological responses: heart rate, hormonal changes, and sweating

- Behavioral expressions: facial expressions, posture, and tone of voice

- Cognitive interpretations: thoughts, memories, and expectations

- Social context: culture and interpersonal dynamics

Emotional AI often relies on observable signals, such as smiles or vocal changes, as proxies. Yet these signals can mislead: a smile might reflect joy, discomfort, or sarcasm.

2. The Rise of Affective Computing

Affective computing enables machines to detect, interpret, and respond to human emotions.

Why Emotions Matter in AI

Humans are not rational calculators. Emotions like frustration, boredom, or excitement affect how we interact with computers. Emotion recognition AI aims to:

- Adapt learning platforms to student engagement

- Detect emotional distress in healthcare apps

- Improve virtual assistants using AI empathy

From Concept to Reality

Advances in machine learning, deep learning, and sensor technology have turned emotional AI from a theoretical idea into practical tools for real-world applications.

3. How AI Reads Human Emotions

Emotional AI analyzes measurable signals that correlate with emotions. Key approaches include:

Facial Expression Analysis

AI uses computer vision to detect facial expressions, sometimes including fleeting micro-expressions. Machine learning models learn the visual patterns associated with happiness, anger, sadness, surprise, fear, and disgust.

Pros: real-time, non-invasive

Cons: cultural differences, intentional masking

Voice and Speech Analysis

Acoustic features such as pitch, tempo, and volume offer insight into emotional states. NLP, by contrast, analyzes the emotional tone of the words themselves, whether transcribed from speech or written as text.

Challenges: accents, background noise, personal speaking style
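To make the acoustic side concrete, here is a minimal sketch, assuming a mono signal supplied as a list of float samples, that computes two of the features named above: loudness as root-mean-square (RMS) amplitude, and pitch via a naive autocorrelation search. The function names and the 50 to 500 Hz search range are illustrative choices, and tempo is not covered.

```python
import math

def rms_volume(samples):
    """Root-mean-square amplitude, a simple proxy for loudness."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def estimate_pitch(samples, sample_rate, fmin=50.0, fmax=500.0):
    """Naive pitch estimate (Hz): pick the lag, within an assumed voice range,
    at which the signal best correlates with a shifted copy of itself."""
    min_lag = int(sample_rate / fmax)
    max_lag = int(sample_rate / fmin)
    if len(samples) <= max_lag:
        raise ValueError("signal too short for the requested pitch range")
    best_lag, best_corr = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        corr = sum(samples[i] * samples[i + lag] for i in range(len(samples) - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return sample_rate / best_lag

# Usage: a synthetic 200 Hz tone lasting 0.2 s, sampled at 8 kHz
SAMPLE_RATE = 8000
tone = [math.sin(2 * math.pi * 200 * n / SAMPLE_RATE) for n in range(1600)]
print(f"volume={rms_volume(tone):.3f}, pitch={estimate_pitch(tone, SAMPLE_RATE):.1f} Hz")
```

Plain autocorrelation like this is sensitive to noise and can make octave errors on real speech, which is one reason practical systems use dedicated pitch trackers and much richer feature sets.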

Text and Sentiment Analysis

Using NLP, emotion recognition AI can detect emotion in written text, evaluating sentiment and tone in messages, emails, and social media posts.

Limitations: sarcasm, humor, idioms, and cultural context
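A toy version of lexicon-based emotion detection shows both the idea and its main weakness. The word list below is hypothetical and tiny; real systems rely on large curated lexicons or trained language models. Note how the sarcastic second example is scored as joy.

```python
import re
from collections import Counter

# Hypothetical mini-lexicon mapping words to emotions (illustration only)
EMOTION_LEXICON = {
    "happy": "joy", "great": "joy", "love": "joy", "thanks": "joy",
    "angry": "anger", "furious": "anger", "annoyed": "anger",
    "sad": "sadness", "disappointed": "sadness", "miss": "sadness",
    "scared": "fear", "worried": "fear", "afraid": "fear",
}

def detect_emotions(text):
    """Count lexicon hits per emotion in a piece of text."""
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(EMOTION_LEXICON[w] for w in words if w in EMOTION_LEXICON)

def dominant_emotion(text):
    """Return the most frequent emotion, or 'neutral' if no word matched."""
    counts = detect_emotions(text)
    return counts.most_common(1)[0][0] if counts else "neutral"

print(dominant_emotion("I'm so worried and scared about tomorrow."))  # fear
print(dominant_emotion("Oh great, my flight got cancelled again."))  # joy (sarcasm missed)
```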

Physiological Signals

Wearables track heart rate, skin conductance, breathing, and sleep. Machine learning models correlate these with stress or arousal.

Privacy is crucial, as physiological data is sensitive.
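A common measure derived from wearable data is heart rate variability (HRV). The sketch below computes mean heart rate and RMSSD (root mean square of successive differences between beats) from RR intervals in milliseconds. Reduced HRV is often associated with stress, but the flagging rule here (falling below 70% of a personal baseline), the function names, and the sample intervals are hypothetical illustrations, not a validated or clinical criterion.

```python
import math
from statistics import mean

def heart_rate_bpm(rr_ms):
    """Mean heart rate from RR intervals (milliseconds between beats)."""
    return 60_000 / mean(rr_ms)

def rmssd(rr_ms):
    """Root mean square of successive RR differences, a common HRV measure."""
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(mean([d * d for d in diffs]))

def possible_stress(rr_ms, baseline_rmssd, drop_ratio=0.7):
    """Hypothetical rule: flag when HRV drops well below this person's own
    baseline. The 0.7 ratio is illustrative, not a clinical threshold."""
    return rmssd(rr_ms) < drop_ratio * baseline_rmssd

# Invented RR intervals for a relaxed and a tense period
relaxed = [850, 910, 870, 930, 880]
tense = [700, 710, 705, 712, 708]
baseline = rmssd(relaxed)
print(f"relaxed: {heart_rate_bpm(relaxed):.0f} bpm, RMSSD {baseline:.1f} ms")
print(f"tense:   {heart_rate_bpm(tense):.0f} bpm, RMSSD {rmssd(tense):.1f} ms")
print("possible stress:", possible_stress(tense, baseline))
```

Comparing against a personal baseline rather than a population average is one way to respect the individual differences discussed later in this post.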

Multimodal Emotion Recognition

The most advanced emotional AI combines face, voice, text, and physiological data, aiming for more accurate emotion detection than any single signal can provide.
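One common way to combine modalities is late (decision-level) fusion: each modality's model outputs a probability distribution over emotions, and those distributions are merged. The sketch below uses a weighted average; the emotion set, weights, and model outputs are hypothetical, and many systems instead fuse features earlier or learn the combination.

```python
EMOTIONS = ("joy", "anger", "sadness", "neutral")

def late_fusion(modality_probs, weights):
    """Weighted average of per-modality probability distributions.
    Modalities whose output is None (e.g. no wearable present) are skipped
    and the remaining weights are renormalized."""
    available = {m: p for m, p in modality_probs.items() if p is not None}
    if not available:
        raise ValueError("no modality produced a prediction")
    total_weight = sum(weights[m] for m in available)
    fused = dict.fromkeys(EMOTIONS, 0.0)
    for modality, probs in available.items():
        for emotion in EMOTIONS:
            fused[emotion] += weights[modality] * probs[emotion] / total_weight
    return fused

# Hypothetical outputs: the face model sees a smile, but voice and text suggest anger
predictions = {
    "face":  {"joy": 0.70, "anger": 0.10, "sadness": 0.05, "neutral": 0.15},
    "voice": {"joy": 0.20, "anger": 0.50, "sadness": 0.10, "neutral": 0.20},
    "text":  {"joy": 0.10, "anger": 0.60, "sadness": 0.10, "neutral": 0.20},
    "physiology": None,  # no wearable data available
}
weights = {"face": 0.3, "voice": 0.3, "text": 0.3, "physiology": 0.1}
fused = late_fusion(predictions, weights)
print(max(fused, key=fused.get), {e: round(p, 2) for e, p in fused.items()})
```

In this invented example the smile alone would suggest joy, but the fused estimate leans toward anger, echoing the earlier point that a single signal such as a smile can mislead.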

4. Machine Learning in Emotional AI

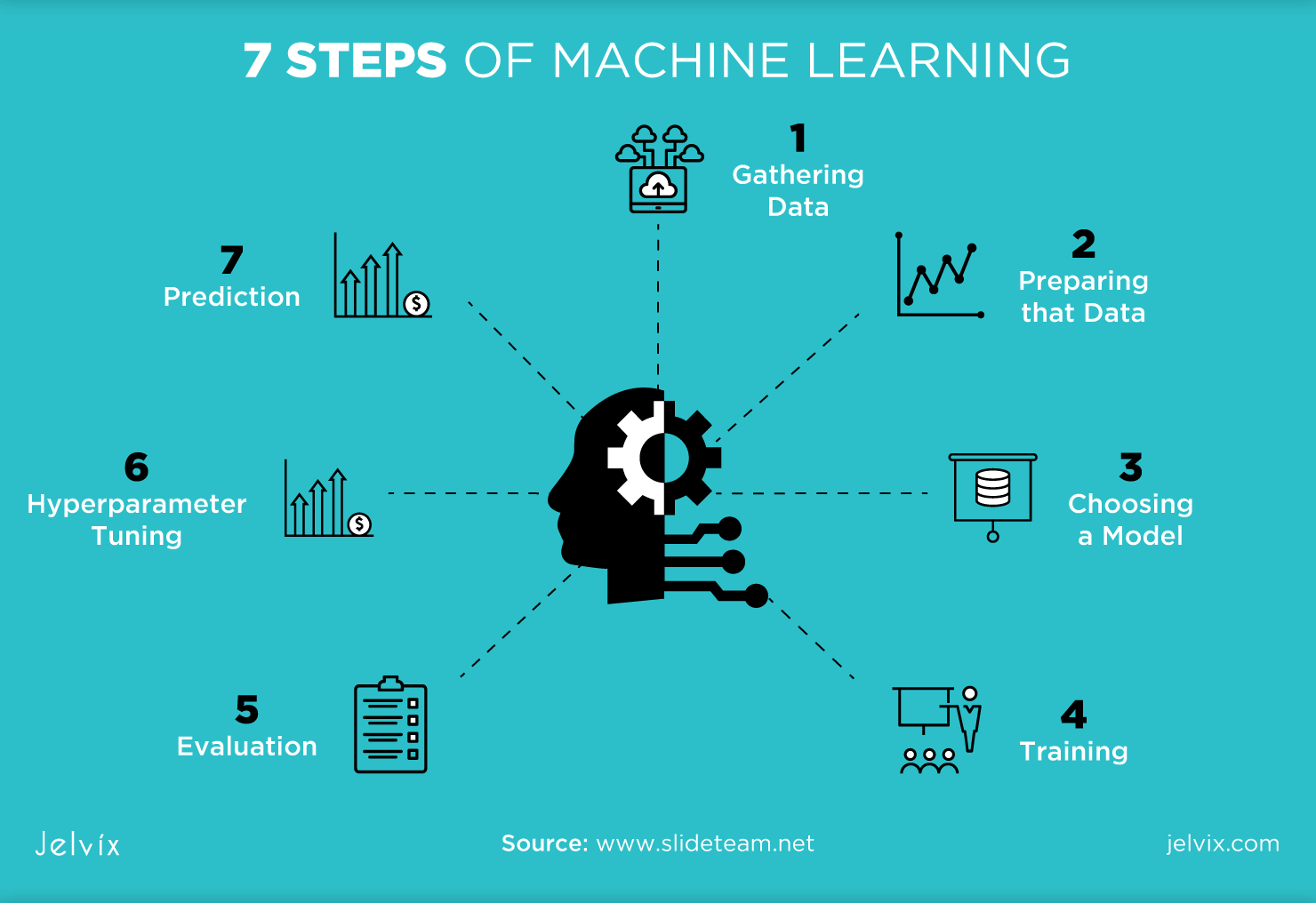

Machine learning powers emotion recognition AI. Models learn patterns from labeled datasets.
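To make "learning from labeled data" concrete, here is a deliberately tiny supervised learner: a nearest-centroid classifier. It averages the feature vectors for each labeled emotion, then assigns a new input to the closest average. The two features (smile intensity and brow lowering, scaled 0 to 1) and all training values are invented for illustration; real systems typically train deep neural networks on large annotated datasets.

```python
import math
from collections import defaultdict

def train_centroids(examples):
    """Average the feature vectors for each label; these averages are the model."""
    sums, counts = {}, defaultdict(int)
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        sums[label] = [s + v for s, v in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in vec] for label, vec in sums.items()}

def predict(centroids, features):
    """Assign the label whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))

# Hypothetical labeled data: (smile_intensity, brow_lowering)
training = [
    ((0.9, 0.1), "happiness"), ((0.8, 0.2), "happiness"),
    ((0.1, 0.9), "anger"),     ((0.2, 0.8), "anger"),
    ((0.1, 0.2), "neutral"),   ((0.2, 0.1), "neutral"),
]
centroids = train_centroids(training)
print(predict(centroids, (0.7, 0.3)))    # happiness
print(predict(centroids, (0.25, 0.75)))  # anger
```

The model only knows the labels it was given, so any bias or oversimplification in the labels is learned along with the patterns.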

Emotional Labels and Challenges

Emotional AI typically classifies discrete emotions such as happiness, sadness, and anger. Real human emotions, however, are nuanced, mixed, and constantly changing.

- Continuous models instead track valence (how pleasant or unpleasant a feeling is) and arousal (how activated or calm it is), allowing subtler emotion detection

- Labels can introduce bias and oversimplification
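The contrast between discrete and continuous labels can be illustrated by collapsing a continuous (valence, arousal) point into one of four coarse quadrant labels, loosely following circumplex-style models of emotion. The [-1, 1] scale and the quadrant names are illustrative assumptions.

```python
def quadrant_label(valence, arousal):
    """Collapse a continuous (valence, arousal) point, each in [-1, 1],
    into one of four coarse labels. Quadrant names are illustrative."""
    if not (-1.0 <= valence <= 1.0 and -1.0 <= arousal <= 1.0):
        raise ValueError("valence and arousal must lie in [-1, 1]")
    if arousal >= 0:
        return "excited" if valence >= 0 else "tense"
    return "calm" if valence >= 0 else "sad"

# Two quite different states collapse to the same discrete label
print(quadrant_label(0.9, 0.8))    # excited
print(quadrant_label(0.05, 0.8))   # excited (barely positive, same label)
print(quadrant_label(-0.6, -0.4))  # sad
```

The continuous coordinates keep the difference between the first two states; the discrete label throws it away, which is the oversimplification the list above warns about.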

5. Applications of Emotional AI

Customer Service & Marketing

- Detect frustration or satisfaction

- Chatbots respond using AI empathy

- Analyze reactions to ads

Education

- Track student engagement and boredom

- Adapt lessons in real time with emotion recognition AI

Mental Health & Wellbeing

- Early detection of depression or anxiety

- Support therapy via wearable and speech-based emotional AI tools

Social Robots & Companions

- Build trust with users in elder care and therapy settings

- Simulate empathy without real emotions

Entertainment & Gaming

- Adapt stories or difficulty based on player emotion

- Increase immersion using multimodal emotion recognition
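A minimal sketch of emotion-driven difficulty adjustment, assuming some upstream model supplies an emotion label and a confidence score. The function name, the labels, the 1 to 10 difficulty scale, and the 0.6 confidence threshold are all hypothetical.

```python
def adjust_difficulty(level, emotion, confidence,
                      step=1, min_level=1, max_level=10, threshold=0.6):
    """Hypothetical rule: ease off when the player seems frustrated,
    ramp up when they seem bored, and ignore low-confidence estimates."""
    if confidence < threshold:
        return level  # not sure enough to act on the estimate
    if emotion == "frustration":
        level -= step
    elif emotion == "boredom":
        level += step
    return max(min_level, min(max_level, level))  # stay within the scale

print(adjust_difficulty(5, "frustration", 0.8))  # 4
print(adjust_difficulty(5, "boredom", 0.8))      # 6
print(adjust_difficulty(5, "frustration", 0.4))  # 5 (low confidence, no change)
```

Ignoring low-confidence estimates is one simple guard against adapting the game to a misread emotion.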

6. Limitations: Can AI Truly Feel Emotions?

No. Emotional AI detects patterns but does not experience emotions.

- Simulates empathy, but lacks consciousness

- Maps inputs to outputs probabilistically

- Cannot grasp the personal meaning behind feelings

Even so, simulated empathy can be useful in mental health, education, and social robotics.
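The point that emotional AI maps inputs to outputs probabilistically can be made concrete with the softmax function, which many classifiers use to turn raw scores into probabilities over labels. The scores below are made up for illustration; what the system produces is a probability distribution over labels, not a felt experience.

```python
import math

def softmax(scores):
    """Convert raw label scores to probabilities that sum to 1.
    Subtracting the max score first keeps exp() numerically stable."""
    m = max(scores.values())
    exps = {label: math.exp(s - m) for label, s in scores.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}

# Hypothetical raw scores from an emotion classifier for one input
probs = softmax({"joy": 2.0, "sadness": 0.5, "anger": 0.1})
for label, p in sorted(probs.items(), key=lambda kv: -kv[1]):
    print(f"{label}: {p:.2f}")
```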

7. Cultural and Individual Differences

- Cultural differences affect expression: norms for eye contact, smiling, and tone vary across cultures

- Individuals express emotions differently: some are expressive, others reserved

- Emotional AI must adapt to these variations

8. Bias and Fairness in Emotional AI

- Biased or unrepresentative training data lowers accuracy for underrepresented groups

- Misclassification risks harm in hiring, healthcare, or security

- Ethical AI design requires fairness, transparency, and inclusion
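A basic fairness check is to measure accuracy separately for each group rather than only in aggregate, so that gaps become visible. The sketch below does this on a tiny invented evaluation set; the group names, labels, and predictions are hypothetical, and real audits use far larger samples and additional metrics such as per-group error rates for each emotion.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """records: iterable of (group, true_label, predicted_label).
    Returns a dict mapping each group to its accuracy."""
    correct, total = defaultdict(int), defaultdict(int)
    for group, truth, pred in records:
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}

def accuracy_gap(records):
    """Difference between the best- and worst-served groups."""
    acc = accuracy_by_group(records)
    return max(acc.values()) - min(acc.values())

# Invented evaluation results for two hypothetical groups
records = [
    ("group_a", "joy", "joy"), ("group_a", "anger", "anger"),
    ("group_a", "sadness", "sadness"), ("group_a", "neutral", "neutral"),
    ("group_b", "joy", "joy"), ("group_b", "anger", "neutral"),
    ("group_b", "sadness", "sadness"), ("group_b", "neutral", "anger"),
]
print(accuracy_by_group(records))
print(f"accuracy gap: {accuracy_gap(records):.2f}")
```

An overall accuracy of 75% would hide the fact that, in this invented data, one group is served perfectly and the other only half the time.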

9. Privacy Concerns

- Emotional data is deeply personal

- Unlike a password, emotional data cannot be “reset” once exposed

- Consent and transparency are essential

10. Ethical Boundaries

- Emotion-aware AI could manipulate users

- Oversimplifying emotions risks diminishing human dignity

- Ethical emotion recognition AI must respect autonomy

11. The Future of Emotional AI

Context-Aware Systems

- Integrate environment, history, and social dynamics

Human-AI Collaboration

- Augment human insight rather than replace human judgment

Regulation and Standards

- Guidelines, accountability, and ethical frameworks are essential

12. A Mirror, Not a Mind

AI can detect and respond to emotions, but it does not feel. Emotional AI reflects our inner world and can provide insights, but it cannot share our experience.

The key question: are we ready to decide how, when, and why AI should decode human emotions?
